Accessing LLM Configuration

LLM configuration settings apply globally to your entire organization. To view or change them, go to codegen.com/settings/model. All agents in your organization use the LLM provider and model selected there, unless per-repository or per-agent overrides are explicitly configured (if supported by your plan).
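The fallback from an override to the organization-wide setting can be pictured with a small sketch. It is illustrative only: `ModelChoice`, `effective_choice`, and the model names are hypothetical, and the precedence order (agent over repository over organization) is an assumption, not documented Codegen behavior.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ModelChoice:
    """A provider/model pair (hypothetical type for this sketch)."""
    provider: str
    model: str


def effective_choice(
    org_default: ModelChoice,
    repo_override: Optional[ModelChoice] = None,
    agent_override: Optional[ModelChoice] = None,
) -> ModelChoice:
    """Return the most specific setting that is configured.

    Assumed precedence (not confirmed by Codegen):
    agent override, then repository override, then organization default.
    """
    for candidate in (agent_override, repo_override):
        if candidate is not None:
            return candidate
    return org_default


# Placeholder model names, not real model identifiers.
org = ModelChoice(provider="anthropic", model="example-model-a")
repo = ModelChoice(provider="openai", model="example-model-b")

print(effective_choice(org))                      # no overrides: organization default
print(effective_choice(org, repo_override=repo))  # repository override wins
```

With no overrides configured, every agent resolves to the organization default, which matches the global behavior described above.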

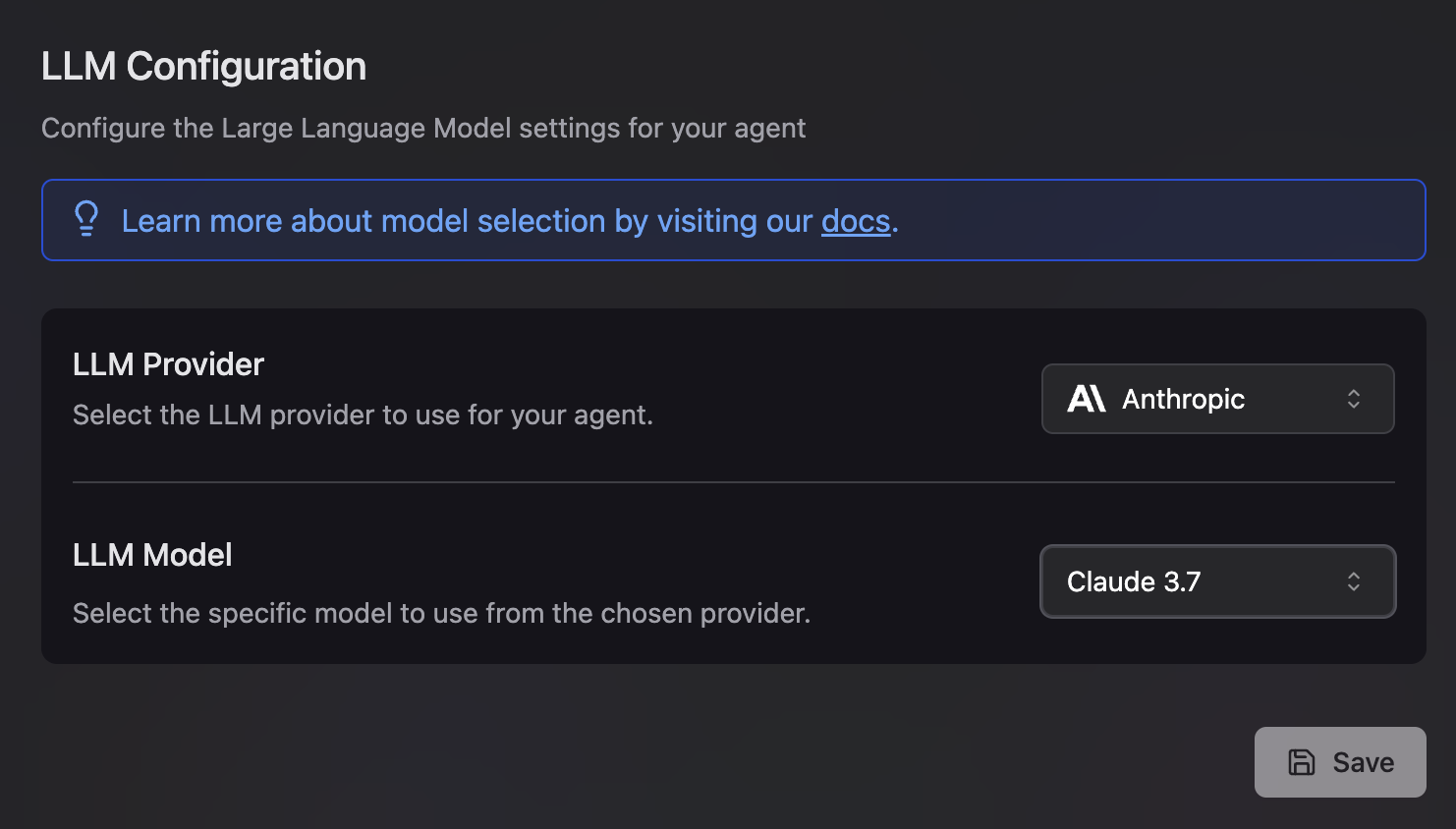

LLM Configuration UI at codegen.com/settings/model

- LLM Provider: Select the primary LLM provider you wish to use. Codegen supports major providers such as:

  - Anthropic

  - OpenAI

  - Google (Gemini)

- LLM Model: Once a provider is selected, you can choose a specific model from that provider’s offerings (e.g., Claude 3.7, GPT-4, Gemini Pro).

Model Recommendation

Custom API Keys and Base URLs

For advanced users or those with specific enterprise agreements with LLM providers, Codegen may allow you to use your own API keys and, in some cases, custom base URLs (e.g., for Azure OpenAI deployments or other proxy/gateway services).

- Custom API Key: If you provide your own API key, usage will be billed to your account with the respective LLM provider.

- Custom Base URL: This allows Codegen to route LLM requests through a different endpoint than the provider’s default API.
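As a rough illustration of what these two settings change, the sketch below resolves the endpoint a request would be sent to and whether usage falls under your own provider account. It does not reflect Codegen's internals: `CustomLLMSettings`, `resolve_base_url`, `uses_own_provider_billing`, and the gateway URL are hypothetical; the default hosts listed are the providers' public API hosts.

```python
from dataclasses import dataclass
from typing import Optional

# Public default API hosts per provider (lookup table assumed for this sketch).
DEFAULT_BASE_URLS = {
    "anthropic": "https://api.anthropic.com",
    "openai": "https://api.openai.com/v1",
    "google": "https://generativelanguage.googleapis.com",
}


@dataclass(frozen=True)
class CustomLLMSettings:
    """Optional custom settings (hypothetical type, not a Codegen API)."""
    provider: str
    api_key: Optional[str] = None   # your own key, if you supply one
    base_url: Optional[str] = None  # e.g. a proxy or gateway endpoint


def resolve_base_url(settings: CustomLLMSettings) -> str:
    """Use the custom base URL when set, else the provider's default host."""
    if settings.base_url:
        return settings.base_url.rstrip("/")
    return DEFAULT_BASE_URLS[settings.provider]


def uses_own_provider_billing(settings: CustomLLMSettings) -> bool:
    """With your own API key, usage is billed to your provider account."""
    return settings.api_key is not None


default = CustomLLMSettings(provider="openai")
gateway = CustomLLMSettings(
    provider="openai",
    api_key="sk-placeholder",                        # placeholder, not a real key
    base_url="https://llm-gateway.example.com/v1/",  # hypothetical gateway
)

print(resolve_base_url(default), uses_own_provider_billing(default))
print(resolve_base_url(gateway), uses_own_provider_billing(gateway))
```

Keeping the two settings independent mirrors the description above: a custom key changes who is billed, while a custom base URL changes only where requests are routed.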

The availability of specific models, providers, and custom configuration options may vary based on your Codegen plan and the current platform capabilities.